fx

fx is my favorite terminal-based JSON tool. Let's look at how it lets us interactively explore, query, filter, and edit JSON. To wrap things up, we'll compare it to jq, and I'll explain why I prefer fx.

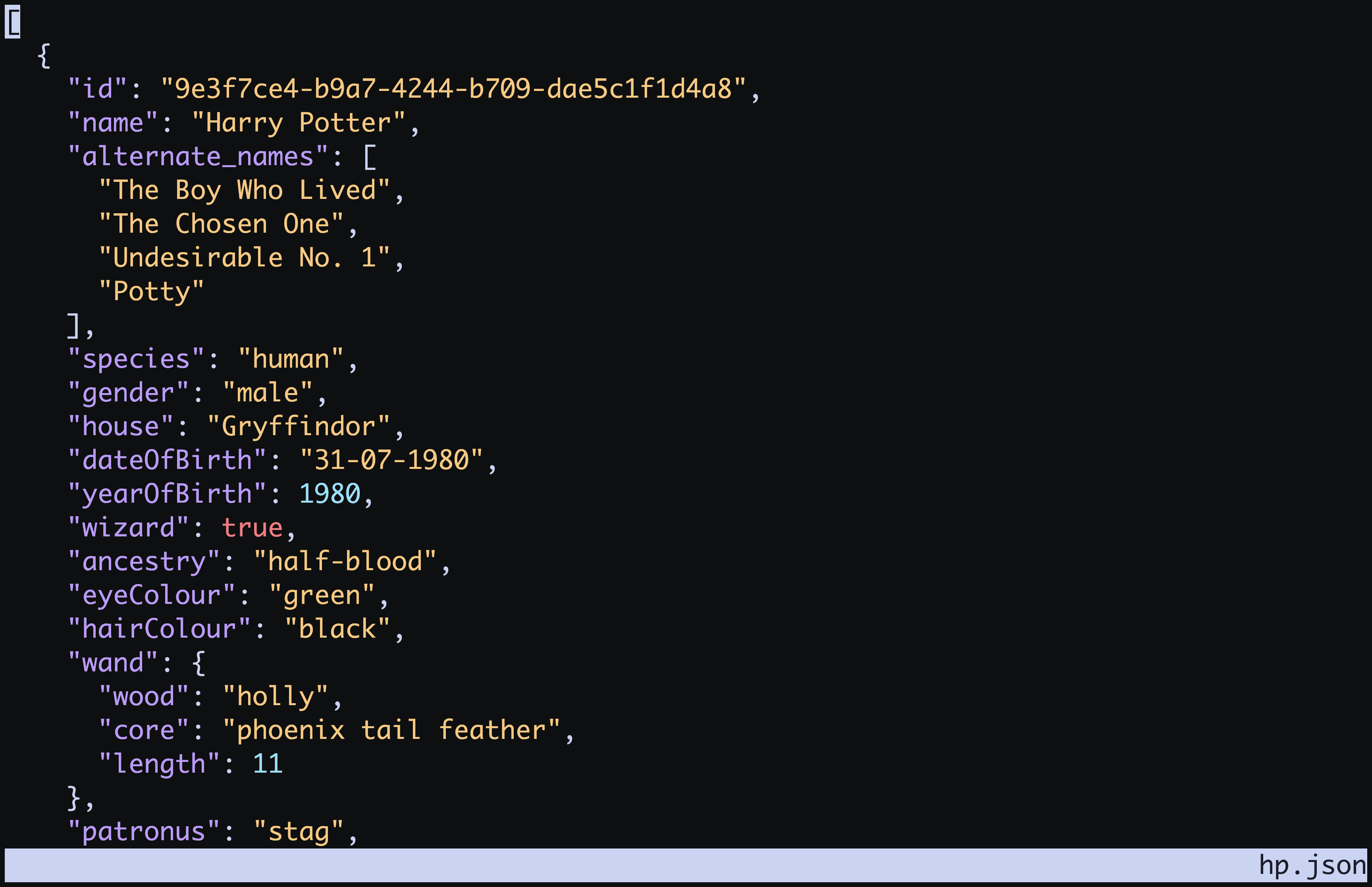

To show off fx, we'll need some JSON. We'll start by fetching a JSON list of Harry Potter characters.

curl https://hp-api.onrender.com/api/characters > hp.json

If we cat hp.json, we see that it is a single line of JSON (formatted for computers, not for human readability).
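For a rough idea of the gap between that single line and a human-friendly view, here's a minimal Node sketch using only the indentation argument of the built-in JSON.stringify. The record is made up for illustration and only borrows field names used later in this post; fx's interactive view adds colors, collapsing, and navigation on top of plain indentation.

```javascript
// A made-up, single-line (minified) JSON string shaped like one hp.json record
const minified =
  '[{"name":"Harry Potter","house":"Gryffindor","wand":{"wood":"holly"}}]';

// Parse it, then re-serialize with 2-space indentation for human eyes
const pretty = JSON.stringify(JSON.parse(minified), null, 2);

console.log(pretty);
```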

Explore interactively

Running fx with the JSON file's name will let you explore it interactively with human-friendly formatting.

fx hp.json

You can navigate using the up and down arrows or vim-style j/k. You can search with / and jump to the next/previous match with n/N.

Note that fx shows the current JSON path in the bottom left as you move around. Press . to enter "dig" mode to fuzzy-search for a path.

Pressing y lets you copy the value, path, or key under the cursor.

q, ctrl-c or esc will exit fx.

fx --help will show you how to collapse/expand nodes and the rest of the shortcuts.
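To make the path display and dig mode a little more concrete, here's a small sketch that lists the path of every leaf value in a jq-style notation (like the `.[0].name` queries used later) and filters those paths with a naive in-order character match. The sample records, `leafPaths`, and `fuzzyMatch` are all hypothetical illustrations; fx's own path format and fuzzy matcher may well differ.

```javascript
// Made-up sample records (values are illustrative, not real API output)
const sample = [
  { name: "Harry Potter", wand: { wood: "holly", core: "phoenix feather" } },
  { name: "Hermione Granger", wand: { wood: "vine", core: "dragon heartstring" } },
];

// Collect the path of every leaf value, e.g. ".[0].wand.wood".
// Simplification: keys are assumed to be plain identifiers.
function leafPaths(value, path = "") {
  if (Array.isArray(value)) {
    return value.flatMap((item, i) =>
      leafPaths(item, path === "" ? `.[${i}]` : `${path}[${i}]`)
    );
  }
  if (value !== null && typeof value === "object") {
    return Object.entries(value).flatMap(([key, child]) =>
      leafPaths(child, `${path}.${key}`)
    );
  }
  return [path === "" ? "." : path];
}

// Naive "fuzzy" match: the query's characters must appear in the candidate
// in order, but not necessarily next to each other
function fuzzyMatch(query, candidate) {
  let i = 0;
  for (const ch of candidate) {
    if (i < query.length && ch === query[i]) i++;
  }
  return i === query.length;
}

const paths = leafPaths(sample);
const hits = paths.filter((p) => fuzzyMatch("1wwd", p));

console.log(paths);
console.log(hits); // [ '.[1].wand.wood' ]
```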

Querying and filtering

Querying in fx will feel like the JavaScript map/filter/etc. you already know. fx also provides some optional syntactic sugar.
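As a plain-JavaScript reference point for the commands below, here's roughly what the map and filter steps do, run in Node on a few made-up records shaped like hp.json entries. One thing worth knowing: `.filter(x => x)` keeps only truthy values, which is right for string fields like wand woods but would also drop `0` and `false` on numeric or boolean fields.

```javascript
// Made-up sample records shaped like hp.json entries (not real API output)
const characters = [
  { name: "Harry Potter", wand: { wood: "holly" } },
  { name: "Hermione Granger", wand: { wood: "vine" } },
  { name: "Argus Filch", wand: { wood: "" } },
];

// Plain JavaScript for '.map(x => x.wand.wood)'
const woods = characters.map((x) => x.wand.wood);

// Plain JavaScript for '.filter(x => x)': keep only truthy values
const presentWoods = woods.filter((x) => x);

// Caveat: truthiness also discards 0 and false, not just blank strings
const mixed = ["oak", "", null, 0, false];
const truthyOnly = mixed.filter((x) => x);

console.log(woods);        // [ 'holly', 'vine', '' ]
console.log(presentWoods); // [ 'holly', 'vine' ]
console.log(truthyOnly);   // [ 'oak' ]
```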

Here are some example commands with their non-sugary and sugary versions.

# get a list of wand woods

fx hp.json '.map(x => x.wand.wood)'

fx hp.json .[].wand.wood

fx hp.json @.wand.wood

# wand woods without blank wood names

fx hp.json '.map(x => x.wand.wood).filter(x => x)'

fx hp.json .[].wand.wood '.filter(x => x)'

fx hp.json @.wand.wood '.filter(x => x)'

# living blonde wizard names

fx hp.json '.filter(x => x.alive && x.hairColour === "blonde" && x.wizard).map(x => x.name).sort()'

fx hp.json '.filter(x => x.alive && x.hairColour === "blonde" && x.wizard)' @.name sort

Built-ins and custom functions

sort is a built-in function (there's also uniq and more). fx also allows you to define your own functions in ~/.fxrc.js (or a local .fxrc.js).
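Conceptually, each extra argument you pass to fx is applied to the result of the previous one, left to right. Here's a rough plain-JavaScript model of `fx hp.json @.wand.wood sort uniq present`. `present` matches the helper from my ~/.fxrc.js, but `runPipeline`, `sort`, and `uniq` are hypothetical stand-ins of my own, not fx's actual implementations, which may differ in edge cases.

```javascript
// Hypothetical stand-ins for fx's sort and uniq built-ins,
// plus the `present` helper from ~/.fxrc.js
const sort = (arr) => [...arr].sort();
const uniq = (arr) => [...new Set(arr)];
const present = (arr) => arr.filter((x) => x);

// Hypothetical helper: feed the data through each step, left to right,
// the way fx chains its extra command-line arguments
const runPipeline = (data, ...steps) =>
  steps.reduce((acc, step) => step(acc), data);

// Made-up records (not real API output); note the duplicate and the blank
const characters = [
  { wand: { wood: "holly" } },
  { wand: { wood: "" } },
  { wand: { wood: "vine" } },
  { wand: { wood: "holly" } },
];

// Roughly: fx hp.json @.wand.wood sort uniq present
const woods = runPipeline(
  characters,
  (arr) => arr.map((x) => x.wand.wood), // @.wand.wood
  sort,
  uniq,
  present
);

console.log(woods); // [ 'holly', 'vine' ]
```

Because every step is just a function from data to data, the order matters: here the blank string survives `sort` and `uniq` and is only removed by `present` at the end.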

Using .filter(x => x) to remove blanks is common enough that I've written a custom present function in my ~/.fxrc.js:

// reject any falsy/blank values from an array
global.present = (arr) => arr.filter((x) => x);

To use a custom function or built-in function, add it as an argument to the fx command.

# unique wand woods

fx hp.json @.wand.wood sort uniq present

Because you can run any JavaScript in your custom functions, you can write functions that, for example, filter out secrets or other sensitive information.

Let's imagine we never want to see Voldemort's name, and we never want to leak our Stripe API key while recording a screencast. We can avoid both disasters by building a confidential function.

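// define secrets and their replacements
const secrets = {
  "Lord Voldemort": "He-Who-Must-Not-Be-Named",
};

// only register the Stripe key if it's set; otherwise the key would be the
// string "undefined" and every "undefined" in the data would be masked
if (process.env.STRIPE_API_KEY) {
  secrets[process.env.STRIPE_API_KEY] = "********";
}

// replace every occurrence of each secret (in keys and values alike)
// by round-tripping the data through a JSON string
global.confidential = (x) => {
  let stringVersion = JSON.stringify(x);
  for (const [secret, replacement] of Object.entries(secrets)) {
    stringVersion = stringVersion.replaceAll(secret, replacement);
  }
  return JSON.parse(stringVersion);
};

Here are some example usages: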

# without confidential

fx hp.json '.filter(x => x.house === "Slytherin")' '.[3].name'

Lord Voldemort

# with confidential

fx hp.json '.filter(x => x.house === "Slytherin")' '.[3].name' confidential

He-Who-Must-Not-Be-Named

# Set a fake Stripe API key to test masking it as confidential

export STRIPE_API_KEY="Potter"

# without confidential

fx hp.json .[0].name

Harry Potter

# with confidential

fx hp.json .[0].name confidential

Harry ********

Modifying JSON

You can use map to modify JSON.

For example, here's how we'd replace blank wand.wood strings with nulls:

fx hp.json '.map(c => { c.wand.wood = c.wand.wood || null; return c })' > modified.json

fx versus jq

jq is a great tool. If you already know it, you'll probably be happy continuing to use it (though you can benefit from the interactive parts of fx).

However, learning jq is daunting because it has its own DSL. I've found I can read a jq command reasonably easily but struggle to write one from scratch because I haven't internalized the DSL. I could learn it, but I haven't made that a priority yet.

While jq is a DSL you have to learn, fx just uses JavaScript with some completely optional syntactic sugar.

Compare the following examples:

jq '[.[] | select(.hairColour == "blonde" and .alive == true and .wizard == true) | .name ] | sort' hp.json

fx hp.json '.filter(x => x.alive && x.hairColour === "blonde" && x.wizard).map(x => x.name).sort()'

Here's that same fx command with the sugar.


fx hp.json '.filter(x => x.alive && x.hairColour === "blonde" && x.wizard)' @.name sort

While the syntactic sugar lets you be more succinct, you don't have to learn it to use fx successfully.

fx is a power tool with the ergonomics I'm already familiar with. The ability to write custom functions is super compelling. You should give it a try.